Geoscience in the Digital Age: Modern Research Methods Using Laptops

Modern geoscience faces the challenges and opportunities of the digital era. One of the key tools revolutionizing research in this field is the laptop....

Exploring the Intersection of Technology and Geoscience: A Journey into Agile Geoscience

I. Introduction
   A. Overview of the evolving landscape of technology
   B. Introduction to Agile Geoscience and its pioneering efforts in leveraging technology
II. Agile Geoscience:...

Mystery Unveiled: The Disappearing Lake Phenomenon

Disappearing lakes are fascinating natural events, not just tricks or magic. They happen because of specific conditions in the environment. This phenomenon occurs in places...

The Biography of Joseph Fourier

Joseph Fourier, the revered mathematician born on March 21, 1768, in Auxerre, France, etched an enduring legacy in the annals of mathematical analysis. His profound...

Sea Scales: Unveiling Oceanic Mysteries

The ebb and flow of sea levels create an intricate dance influenced by an array of factors. Beyond the familiar impact of glaciation on polar...

Unveiling Seismic Processing Software Dynamics

Seismic processing software has become an indispensable tool in the field of geophysics, enabling researchers and professionals to analyze seismic data with unprecedented precision and...

Unveiling Julia: The Rising Star in Scientific Computing

Julia, a programming language that has been making waves in the scientific Python community, is gaining significant attention for its unique capabilities and performance. While...

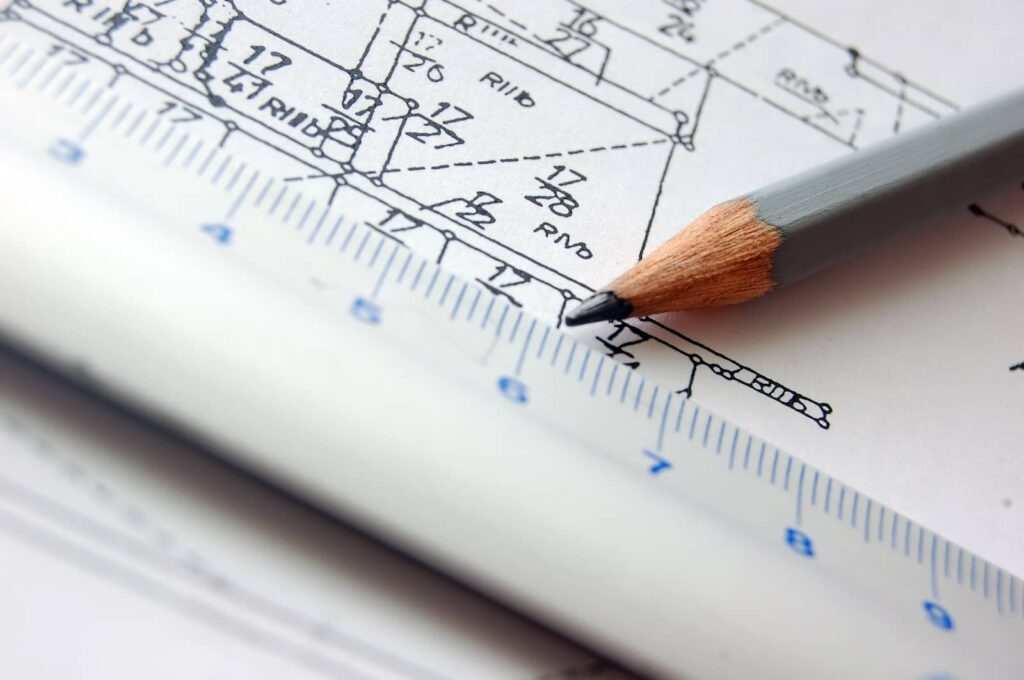

Exploring Geological Modeling: Beyond Blueprints

The world of geological modeling often walks hand in hand with investment, sharing a common trajectory and objective. Geological modeling is an intricate discipline that...

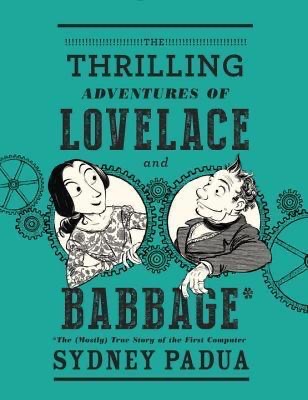

Exploring Science Through Comics: 5 Captivating Reads

Comics and graphic novels have a unique way of blending storytelling with visual art, making complex subjects more accessible and engaging. In this article, we...

Unleash Opportunities with the Geo ToolBox

Some enterprises begin their journey by raising a considerable amount of funding. However, our philosophy fundamentally revolves around operating within our means. We...